Initialize your models, optimizers, and the Torch tensor and functional namespace according to theĬhosen opt_level and overridden properties, if any.Īmp.initialize should be called after you have finished initialize ( models, optimizers=None, enabled=True, opt_level='O1', cast_model_type=None, patch_torch_functions=None, keep_batchnorm_fp32=None, master_weights=None, loss_scale=None, cast_model_outputs=None, num_losses=1, verbosity=1, min_loss_scale=None, max_loss_scale=16777216.0 ) ¶ Therefore, the model weights themselvesĬan (and should) remain FP32, and there is no need to maintain separate FP32 master weights. Out-of-place on the fly as they flow through patched functions. Data, activations, and weights are recast

O1 inserts castsĪround Torch functions rather than model weights. The override master_weights=True does not make sense. For example, selecting opt_level="O1" combined with If you attempt to override a property that does not make sense for the selected opt_level,Īmp will raise an error with an explanation. After selecting an opt_level, you can optionally pass property Dynamic loss scale adjustments are performed by Amp automatically.Īgain, you often don’t need to specify these properties by hand. If loss_scale is the string "dynamic", adaptively adjust the loss scale over time. Loss_scale: If loss_scale is a float value, use this value as the static (fixed) loss scale. FP32 master weights are stepped by the optimizer to enhance precision and capture small gradients. Master_weights: Maintain FP32 master weights to accompany any FP16 model weights. Keep_batchnorm_fp32: To enhance precision and enable cudnn batchnorm (which improves performance), it’s often beneficial to keep batchnorm weights in FP32 even if the rest of the model is FP16.

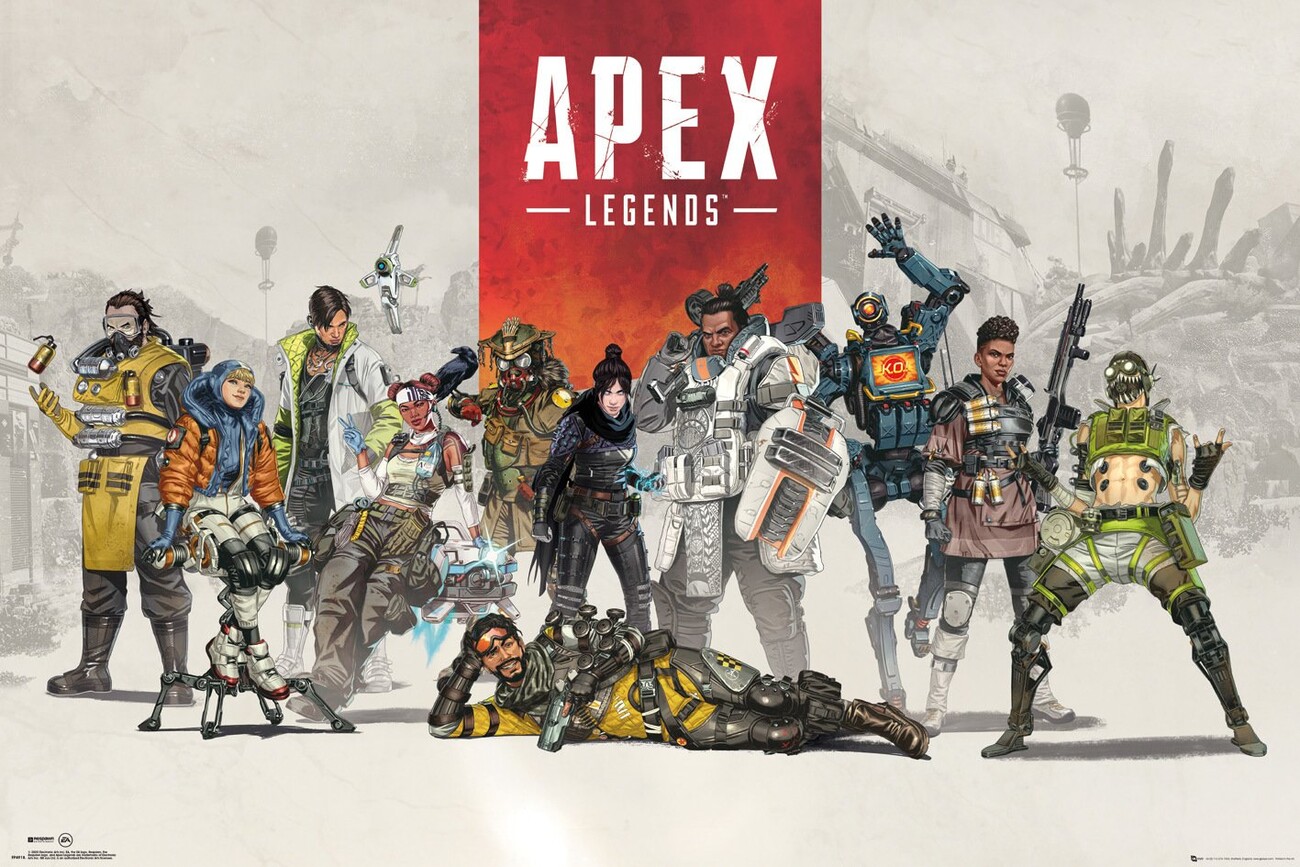

APEX PATCH

Patch_torch_functions: Patch all Torch functions and Tensor methods to perform Tensor Core-friendly ops like GEMMs and convolutions in FP16, and any ops that benefit from FP32 precision in FP32. Specifically, summit implies the topmost level attainable.Currently, the under-the-hood properties that govern pure or mixed precision training are the following:Ĭast_model_type: Casts your model’s parameters and buffers to the desired type. The words summit and apex are synonyms, but do differ in nuance. The pinnacle of worldly success When would summit be a good substitute for apex?

The synonyms pinnacle and apex are sometimes interchangeable, but pinnacle suggests a dizzying and often insecure height. The words peak and apex can be used in similar contexts, but peak suggests the highest among other high points.Īn artist working at the peak of her powers In what contexts can pinnacle take the place of apex? The culmination of years of effort When is peak a more appropriate choice than apex? The meanings of culmination and apex largely overlap however, culmination suggests the outcome of a growth or development representing an attained objective.

APEX SERIES

The war was the climax to a series of hostile actions When could culmination be used to replace apex? However, climax implies the highest point in an ascending series. In some situations, the words climax and apex are roughly equivalent. While the synonyms acme and apex are close in meaning, acme implies a level of quality representing the perfection of a thing.Ī statue that was once deemed the acme of beauty Where would climax be a reasonable alternative to apex? The apex of Dutch culture When might acme be a better fit than apex? While all these words mean "the highest point attained or attainable," apex implies the point where all ascending lines converge. Some common synonyms of apex are acme, climax, culmination, peak, pinnacle, and summit. Frequently Asked Questions About apex How does the noun apex differ from other similar words?
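A small validator can illustrate the override rule for opt_level and property kwargs. This is a hypothetical sketch: check_overrides is an illustrative name, not part of Apex. It encodes only two rules stated here:

- opt_level="O1" combined with master_weights=True is rejected.
- loss_scale must be either a float or the string "dynamic".

```python
# Hypothetical sketch, NOT Apex's code: it mimics the rule that an override
# which makes no sense for the chosen opt_level raises an explanatory error.


def check_overrides(opt_level="O1", master_weights=None, loss_scale=None):
    """Validate a few property overrides; raise ValueError on a bad combination."""
    if opt_level == "O1" and master_weights is True:
        # O1 casts around Torch functions and leaves the model weights in FP32,
        # so separate FP32 master weights would be redundant.
        raise ValueError("master_weights=True does not make sense with opt_level='O1'")
    if loss_scale is not None and loss_scale != "dynamic":
        # Anything other than "dynamic" is treated as a static (fixed) scale;
        # float() raises ValueError for strings that are not numbers.
        loss_scale = float(loss_scale)
    return {"opt_level": opt_level, "master_weights": master_weights, "loss_scale": loss_scale}


try:
    check_overrides("O1", master_weights=True)
except ValueError as err:
    print(err)  # master_weights=True does not make sense with opt_level='O1'
```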
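A loss scale helps because FP16 cannot represent very small gradients; they flush to zero. The sketch below simulates FP16 rounding with the standard-library struct module (format code "e"). It assumes the usual loss-scaling scheme: the loss, and therefore every gradient, is multiplied by the scale before backpropagation and divided by it again before the optimizer step. It is a numerical illustration, not Amp's internals.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # Tiny values round to 0.0; values beyond the FP16 range raise OverflowError.
    return struct.unpack("<e", struct.pack("<e", x))[0]


grad = 1e-8        # a small gradient, below the smallest FP16 subnormal (about 5.96e-8)
scale = 65536.0    # a static loss scale, as if loss_scale=65536.0 had been passed

unscaled_fp16 = to_fp16(grad)          # underflows to zero in FP16
scaled_fp16 = to_fp16(grad * scale)    # about 6.55e-4, representable in FP16
recovered = scaled_fp16 / scale        # unscale in full precision

print(unscaled_fp16)  # 0.0
print(recovered)      # within about 0.1% of 1e-8
```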
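With loss_scale="dynamic", a common policy is to shrink the scale when the scaled gradients overflow and to grow it again after a run of clean steps. The class below is a hypothetical sketch of such a policy, not Apex's implementation. The factor of 2, init_scale and growth_interval are assumptions. min_loss_scale and max_loss_scale borrow the names and default ceiling (16777216.0, that is 2**24) of the amp.initialize parameters, on the assumption that they bound the dynamic scale from below and above.

```python
import math


class DynamicLossScaler:
    """Illustrative dynamic loss scaler (hypothetical; not Apex's implementation).

    Assumed policy: halve the scale and skip the step when any scaled gradient
    is inf/NaN; double it after `growth_interval` consecutive clean steps.
    """

    def __init__(self, init_scale=2.0**16, growth_interval=2000,
                 min_loss_scale=None, max_loss_scale=16777216.0):
        self.scale = init_scale
        self.growth_interval = growth_interval
        self.min_loss_scale = min_loss_scale
        self.max_loss_scale = max_loss_scale
        self._clean_steps = 0

    def update(self, grads):
        """Return True if the optimizer step should run for these scaled grads."""
        overflow = any(math.isinf(g) or math.isnan(g) for g in grads)
        if overflow:
            self.scale /= 2.0
            if self.min_loss_scale is not None:
                # Never drop below the floor.
                self.scale = max(self.scale, self.min_loss_scale)
            self._clean_steps = 0
            return False  # skip this step
        self._clean_steps += 1
        if self._clean_steps >= self.growth_interval:
            # Grow the scale, capped at the ceiling.
            self.scale = min(self.scale * 2.0, self.max_loss_scale)
            self._clean_steps = 0
        return True


scaler = DynamicLossScaler(init_scale=1024.0, growth_interval=2)
scaler.update([float("inf")])  # overflow: step skipped, scale drops to 512.0
scaler.update([0.1])           # clean step 1
scaler.update([0.1])           # clean step 2: scale grows back to 1024.0
```

Halving on overflow reacts quickly to a bad step. Growing only after a long clean run keeps the scale from oscillating.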
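The master_weights property matters because small optimizer updates can vanish when added to an FP16 weight. This sketch uses the same assumption as before: FP16 and FP32 rounding is simulated with struct format codes "e" and "f". It steps two copies of a weight ten times, one stored only in FP16 and one FP32 master copy. Only the master copy accumulates the updates, and the FP16 model weight refreshed from it is the only one that moves. This illustrates the idea, not how Apex stores weights.

```python
import struct


def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


update = 1e-4  # per-step update, smaller than half the FP16 spacing near 1.0 (about 9.8e-4)
steps = 10

w_fp16 = to_fp16(1.0)    # weight stored only in FP16
w_master = to_fp32(1.0)  # FP32 master copy stepped by the optimizer

for _ in range(steps):
    w_fp16 = to_fp16(w_fp16 + update)      # each update rounds away
    w_master = to_fp32(w_master + update)  # updates accumulate in FP32

model_weight = to_fp16(w_master)  # FP16 model weight refreshed from the master

print(w_fp16)        # 1.0
print(model_weight)  # 1.0009765625
```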
